HR leaders today are being asked to do more with less, while carrying responsibility for culture, compliance, and credibility. AI in HR has entered this moment with a compelling promise: faster answers, fewer errors, and relief from administrative overload. For many teams, that promise is real. But when AI starts crossing into decision-making territory, especially in sensitive or culture-driven moments, the risks grow quickly.

The future of HR and AI is not about replacing human judgment. It’s about using AI as a strategic partner that strengthens accuracy and efficiency, while keeping people, empathy, and accountability firmly with People teams.

AI for HR Teams: Escaping Manual Work Without Losing Control

For small to mid-market organizations, HR often becomes the connective tissue holding together disconnected systems, vendors, and processes. Manual data entry, reconciling records, and chasing answers leave little room for strategic work. AI-powered HR solutions can feel like a necessary escape from that grind.

At the same time, HR leaders are accountable for outcomes that cannot afford mistakes. Jon Nall, Tilt’s VP of Leave Compliance, explains that one of the biggest challenges is “the volume and complexity of the leave compliance rules that just keep growing every year. HR is expected to be fast and empathetic and get it right every time…and mistakes can have a huge impact. They affect someone’s income, job protection, sense of security, and they expose the organization to real risk.”

AI can help manage this complexity, but pressure to move faster should never justify handing over decisions that require care, context, and trust.

Where AI Creates Risk Instead of Relief

The real risk for AI in HR is over-relying on it, not simply using it. Generic tools like ChatGPT are increasingly treated as a source of truth for complex questions. In HR, that can quietly introduce rigid processes, disjointed communication, and a loss of empathy.

As Nall explains, “The line is crossed when AI starts making or implying final answers in areas that require judgment or legal interpretation…They don’t understand an employer’s specific policies, workforce, or risk tolerance. Anything involving medical certification evaluation or nuanced eligibility questions really requires human review.”

When HR relies on AI to make judgment calls, employees can feel processed rather than supported. Trust erodes. Communication becomes transactional. Culture suffers, even if compliance boxes appear to be checked.

The Human Work AI Should Never Replace

Some areas call for clear boundaries between AI and HR. Empathy, judgment, and exception handling are not edge cases. They are core to the employee experience.

When an employee is navigating a medical issue, a family crisis, or a complex return to work, decisions rarely fit a clean template. Context matters. Intent matters. Culture matters. During these times, Nall notes that “the law or a company policy might require some individualized assessment… Even when these rules are clear, humans are needed to weigh facts, intent, and impact together.”

These are moments where rigid automation can block compassionate, strategic decisions and break down trust in ways that are difficult to repair.

Leave Experience Management as a Practical Example

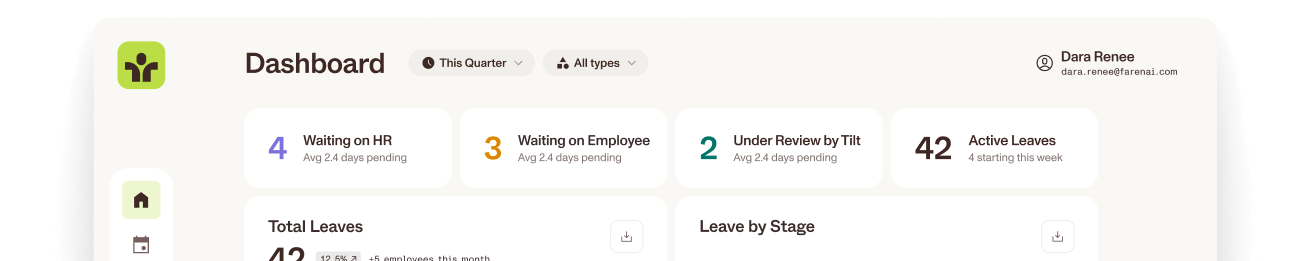

Leave Experience Management (LXM) shows what a healthy AI and HR partnership looks like in practice. AI handles the complexity behind the scenes by tracking timelines, flagging risks, and highlighting compliance gaps. HR retains authority over decisions, communication, and outcomes.

“The right partnership is where AI does the work that is best suited to technology… and then HR does the work that’s better suited for humans, evaluating the full picture and making the final call,” says Nall. “Allowing the technology to handle the complexity behind the scenes frees up HR to focus on communication, empathy, judgment.”

When leave is handled this way, employees feel supported rather than processed. Decisions are explainable. Trust grows. Culture is reinforced instead of strained.

How HR Leaders Can Move Forward with Confidence

Responsible AI implementation starts small and intentional. The safest entry point is low-risk administrative workflows like tracking, reminders, and documentation. Decision-making should always remain with HR, supported by tools designed specifically for HR compliance, not generic AI.

The goal is not speed; it’s credibility. When AI reduces administrative burden and improves accuracy, HR gains time to focus on what matters most. Nall points out, “AI should support HR, not replace it. Let technology handle the organization and the visibility so HR can focus on judgment, empathy, and culture. The future is not AI versus HR. It’s AI and HR working together, each with clear roles and clear boundaries.”

When AI is treated as a strategic partner, HR becomes more human, not less. Processes run smoothly, compliance confidence increases, and the employee experience remains grounded in care, clarity, and trust.